Cursor Agent vs. Claude Code

Cursor and Anthropic recently released coding agents within a week of each other. I wanted to learn which was better, so I gave each the same three coding tasks on a production Rails app, and compared their performance across five categories:

- UX

- Code Quality

- Cost

- Autonomy

- Tests & Version Control

Here's what I learned along the way.

You can also check out the YouTube version of this article. Screen recordings are worth 30,000 words per second.

UX: IDE vs. CLI

Cursor is an IDE.

Claude Code is a CLI.

Cursor's had an agent for a while, but it was called Composer, was a bit experimental, and was buried in the interface behind chat. Cursor 0.46 renamed Composer to Agent and promoted it to be the default LLM interface.

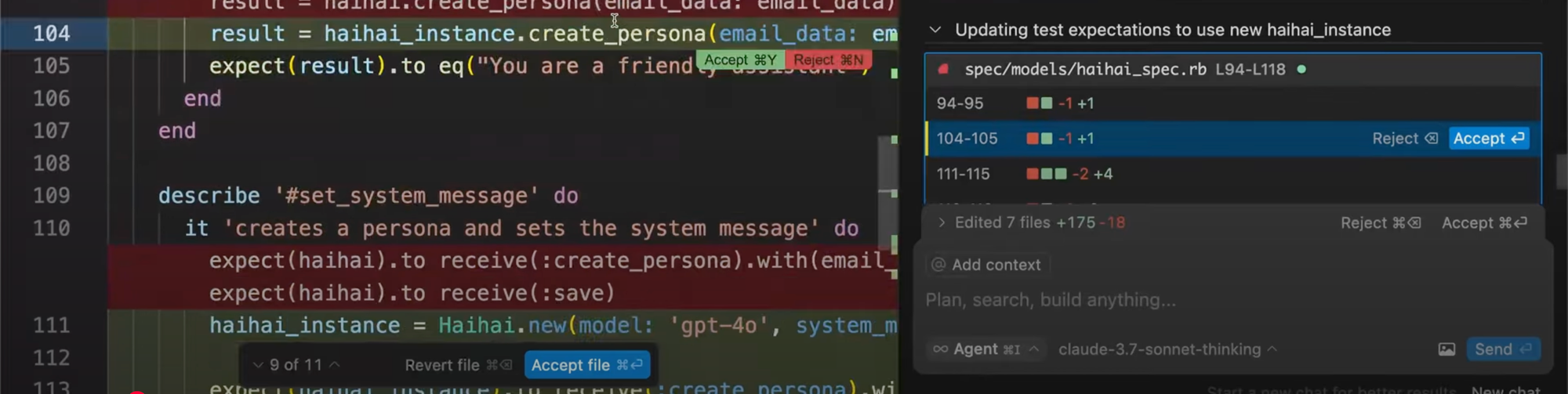

Some elements of the agent design were clunky. At times, there would be up to four different buttons where I could click "Accept."

There were times when I saw a spinning circle, and I thought I was waiting for an LLM response, but Cursor was actually waiting for me to click a button. Other times, terminal commands running in the agent pane needed me to type yes or no, but the terminal display overflowed its narrow window because it was confined to 1/3 of the screen, so I had to go hunting for why the agent had silently locked up.

All of these UX pains are symptoms of cramming agent interactions into 1/3 of the screen under the base assumption that "this is an IDE and 2/3 of the screen needs to be reserved for file editing."

And yes, of course, I should be reviewing all the file changes line by line... But I wasn't. The diffs were coming fast and furious, a bunch of different file tabs were opening, and I wasn't sure where to click to approve one vs. approve all, or whether I could make small changes without screwing up all the agent's work. I felt overwhelmed, and it was easier to just keep clicking apply all.

And yet, it... just sorta worked?

Candidly, now that the agent was doing so much for me, I found myself asking, "Do I really need the file editor to be the primary focus?"

(For what it's worth, for nearly my entire life I've had either QuickBASIC, Turbo Pascal, Borland C++, Notepad++, Dreamweaver, TextEdit, Sublime, or VS Code running on my computer. I'm as uncomfortable with what I just wrote as you are.)

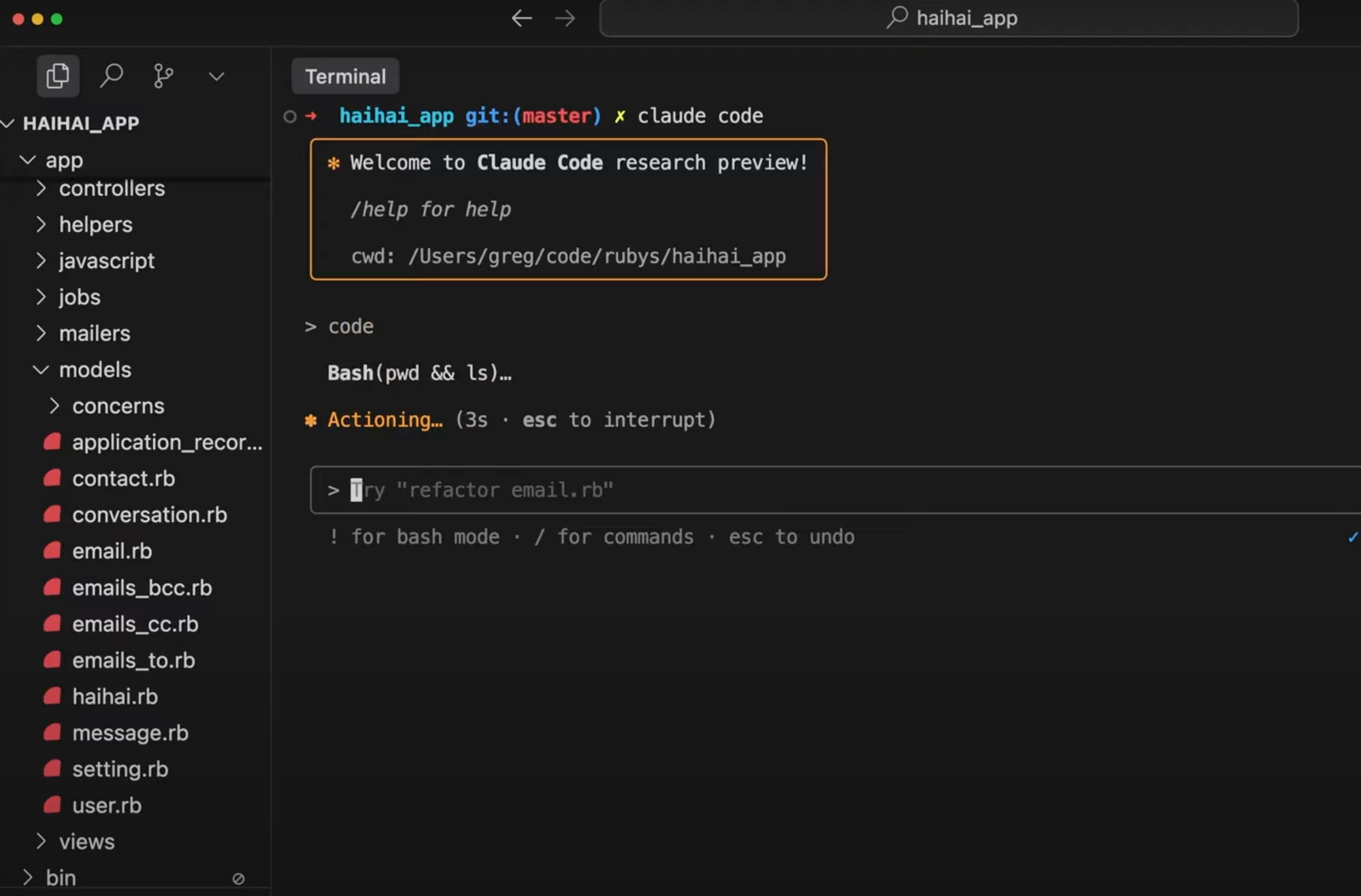

By contrast, Claude Code is a CLI. You run the command in the root of your project, and it examines your codebase and asks what you want help with. You tell it what to do, and then it simply asks a series of yes/no questions as it comes up with commands.

You just have that terminal window. A single pane. At no point are you seeing files open and close, though you can see and approve the diffs in single file as they roll by.

Since the agent was my primary way of interacting with the code base, that single pane felt like a better interface.

When it comes to UX, I prefer Claude Code's CLI.

Code Quality

A little bit about the coding tasks I assigned the agents:

I have a Rails app that serves as an email wrapper for GPTs. I have several email bots set up (edit@, review@, recipes@, etc.), each with its own system message, conversation tracking, and so on. If you want to try it out, forward an email to roast@haihai.ai and it will roast your email and reply back to you.

There's enough complexity in this app that I hadn't touched it in nine months because of the effort required to load the context into my brain. These agents felt like a good opportunity to get some momentum on a stalled project.

These were the top three issues on my backlog:

- Clean up my tests and dependencies to fix some deprecation warnings

- Replace Langchain with direct calls to OpenAI's APIs

- Add support for Anthropic

I ran through these changes with Claude Code, reverted, and ran through them again with Cursor Agent.

Both agents successfully completed the three tasks, and did so in remarkably similar ways, likely because Claude 3.7 Sonnet was powering both agents.

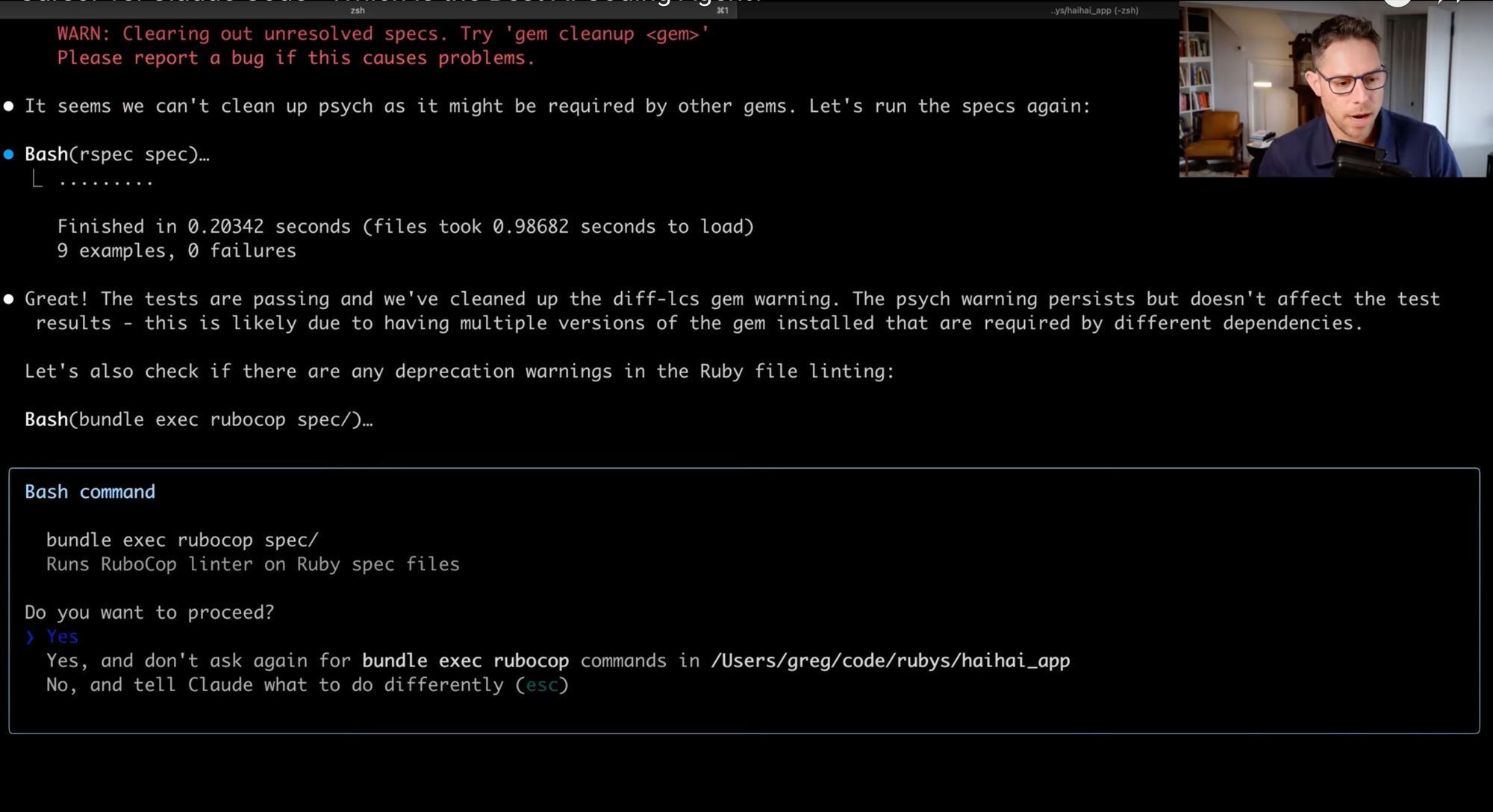

I was impressed how easily both were able to fix my dependency issues: this is the grindy type of programming work that brings side projects to a crawl. But each agent was able to methodically run the tests, work the warnings, and update the Gemfile until the warnings stopped. It did seem like the type of gruntwork that agents are great at.

I was impressed how easily they managed to strip out Langchain for native calls to OpenAI. It made me wonder about vendor lock-in and whether developer experience is still important in a world where a refactor is just one prompt away.

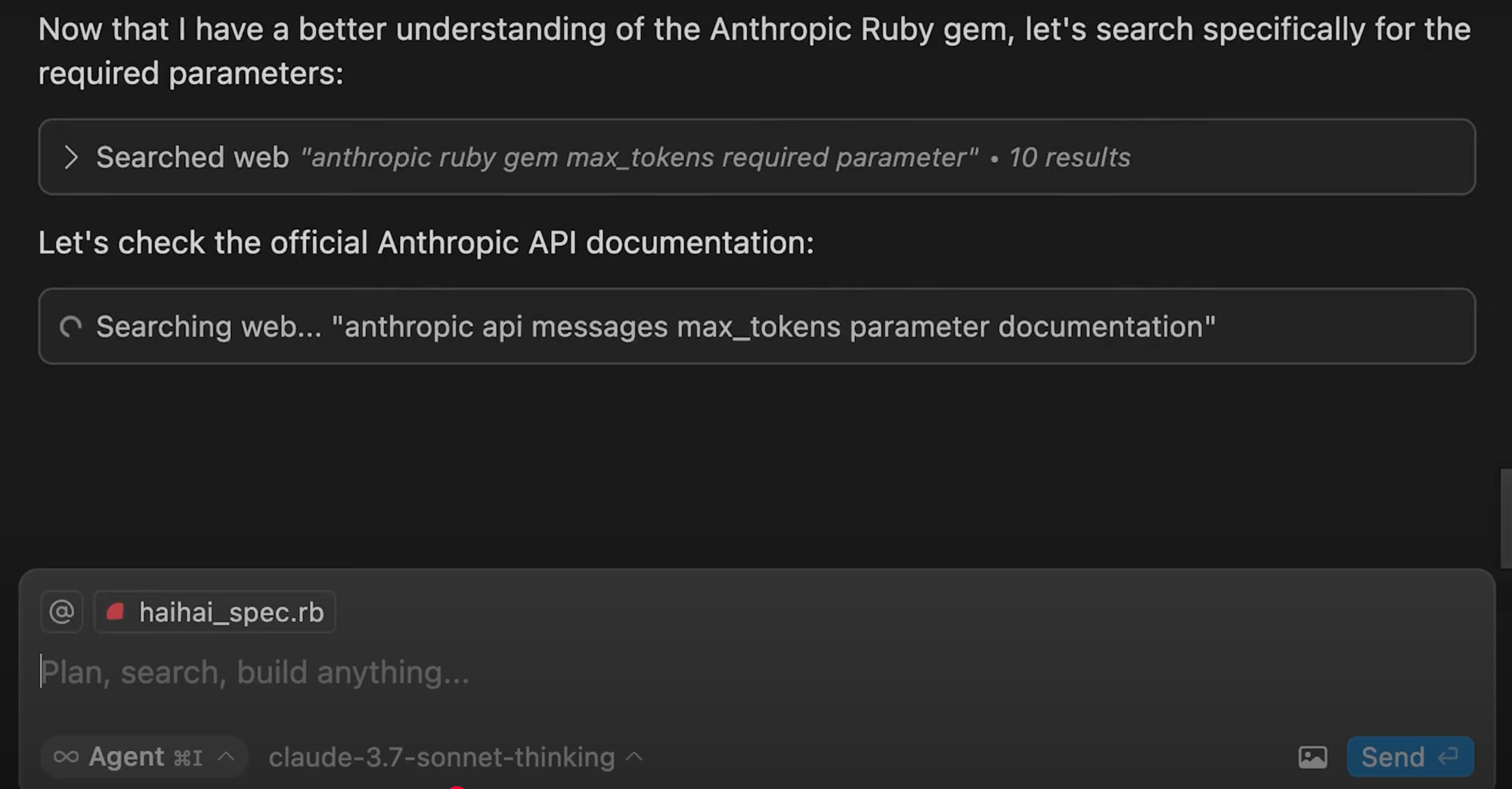

Both agents struggled to add Anthropic support via the Anthropic Ruby gem, which was funny considering that Claude 3.7 Sonnet was doing the work. Both wanted to mimic the syntax already present in the code for OpenAI, but Anthropic uses different parameters, client instantiation, etc.

Cursor was able to search the web for documentation and find the right answer.

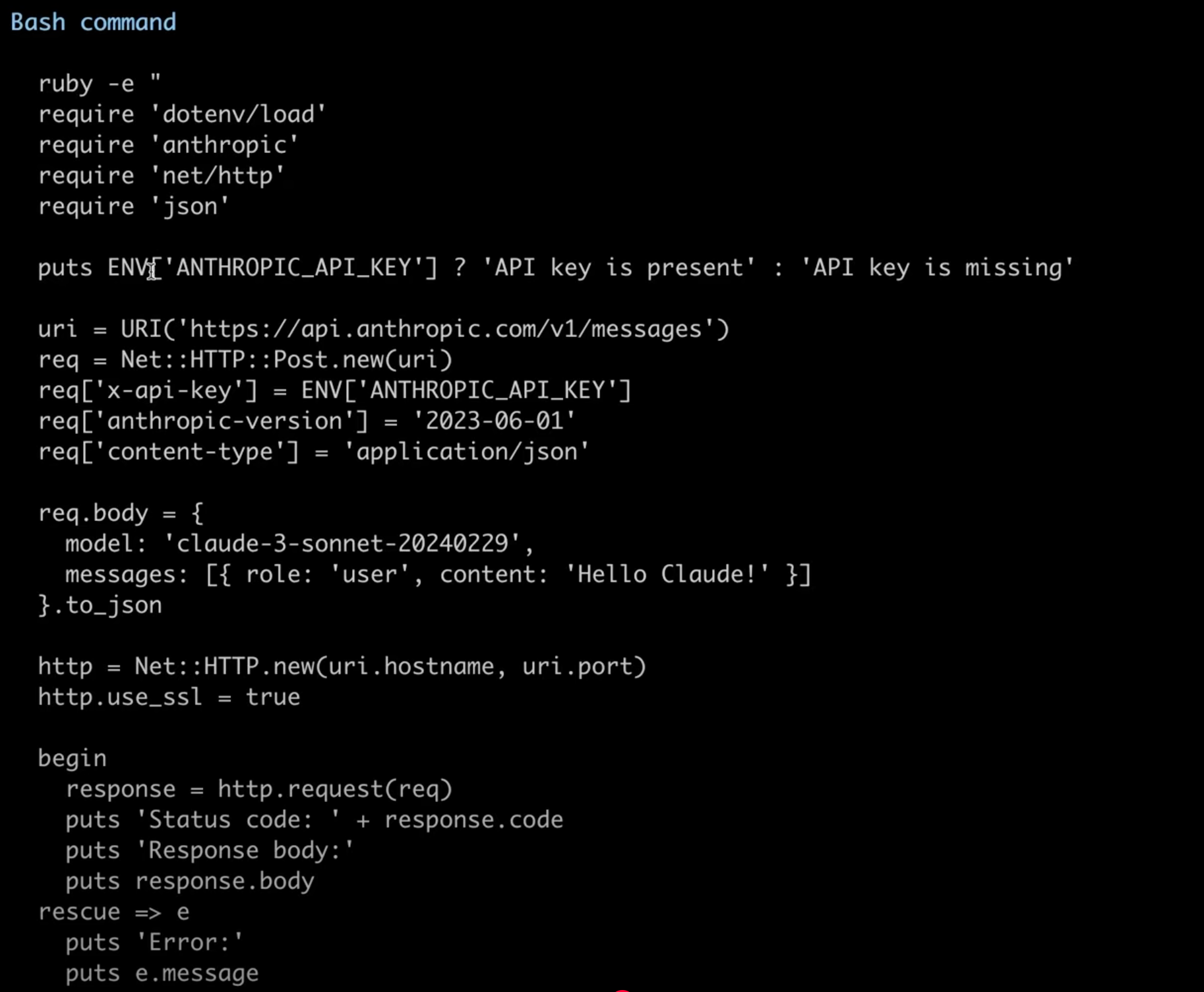

Claude Code gave up after a couple of iterations and did it "the hard way", writing its own integration for the Anthropic API using direct HTTP calls. It even ran the code as a one-off bash command to see if it worked before adding it to the codebase! While this solved the problem, it wasn't exactly what I was envisioning.
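
To make the "different parameters" point concrete, here is a minimal Ruby sketch of what direct HTTP calls to each provider can look like. It is not the code either agent wrote. The helper names (`build_openai_request`, `build_anthropic_request`, `send_request`) and the default `max_tokens` value are assumptions made for illustration; the endpoints, headers, and payload fields follow the providers' public HTTP APIs.

```ruby
require "json"
require "net/http"
require "uri"

# Hypothetical helper: build (but do not send) a request for OpenAI's
# Chat Completions API. The system prompt is the first entry in `messages`.
def build_openai_request(api_key:, model:, system:, user:)
  request = Net::HTTP::Post.new(URI("https://api.openai.com/v1/chat/completions"))
  request["Authorization"] = "Bearer #{api_key}"
  request["Content-Type"] = "application/json"
  payload = {
    model: model,
    messages: [
      { role: "system", content: system },
      { role: "user", content: user }
    ]
  }
  request.body = JSON.generate(payload)
  request
end

# Hypothetical helper: build the equivalent request for Anthropic's Messages
# API. Differences from OpenAI: `x-api-key` and `anthropic-version` headers,
# a top-level `system` field, and a required `max_tokens`.
def build_anthropic_request(api_key:, model:, system:, user:, max_tokens: 1024)
  request = Net::HTTP::Post.new(URI("https://api.anthropic.com/v1/messages"))
  request["x-api-key"] = api_key
  request["anthropic-version"] = "2023-06-01"
  request["Content-Type"] = "application/json"
  payload = {
    model: model,
    max_tokens: max_tokens,
    system: system,
    messages: [{ role: "user", content: user }]
  }
  request.body = JSON.generate(payload)
  request
end

# Send a prepared request over HTTPS and return the parsed JSON response.
def send_request(request)
  uri = request.uri
  response = Net::HTTP.start(uri.host, uri.port, use_ssl: true) do |http|
    http.request(request)
  end
  JSON.parse(response.body)
end
```

The two builders take the same arguments but produce differently shaped requests, which is why copying the OpenAI call pattern onto Anthropic does not work. The replies differ too: OpenAI puts the text under `choices[0].message.content`, while Anthropic returns it under `content[0].text`.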

On several occasions Claude Code said it was checking the documentation, but it wasn't clear to me what documentation it was checking. It did not seem to be searching the web, and it never seemed to find the answers it was looking for.

I'd give Cursor the nod here based on its ability to search the web for documentation.

Cost

Claude Code can get expensive – though I suppose "expensive" is all relative when we're talking about software development.

I spent about 90 minutes working with Claude Code to implement these three changes to my codebase. It ended up costing about $8.

Not a lot of money in the grand scheme of software development, but if I were doing this for three or four hours a day, every day, it would add up.

Cursor, on the other hand -- I pay my $20 a month and with that get 500 premium model requests. Going through these three coding tasks used fewer than 50 premium requests. So let's roughly say it cost me 1/10th of my subscription, or $2, to run this exercise on Cursor and $8 on Claude Code. Claude Code was about four times more expensive.

This is a super imperfect, naive estimate -- the real answer might be anywhere between 2x and 8x -- but Claude Code is non-trivially more expensive than Cursor Agent, despite being powered by the same model. I suspect that this is because Claude Code uses a lot more of your codebase in its requests.
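
For anyone who wants to plug in their own numbers, the back-of-the-envelope math above can be sketched in a few lines of Ruby. The method names `cost_comparison` and `hourly_rate` are made up for this example, and the hourly figure assumes cost scales linearly with session time, which is a simplification.

```ruby
# Hypothetical helper: compare a metered bill against the share of a flat
# subscription consumed by the same work, using a naive per-request average.
def cost_comparison(subscription_usd:, included_requests:, requests_used:, metered_usd:)
  per_request = subscription_usd.to_f / included_requests
  subscription_share = per_request * requests_used
  {
    per_request: per_request,
    subscription_share: subscription_share,
    ratio: metered_usd / subscription_share
  }
end

# Hypothetical helper: metered cost per hour, assuming linear scaling.
def hourly_rate(metered_usd:, minutes:)
  metered_usd.to_f / (minutes / 60.0)
end

# Figures from this article: $20 for 500 requests, roughly 50 requests used,
# and an $8 Claude Code session that lasted about 90 minutes.
result = cost_comparison(subscription_usd: 20, included_requests: 500,
                         requests_used: 50, metered_usd: 8)
puts format("Cursor: $%.2f per request, $%.2f for the exercise",
            result[:per_request], result[:subscription_share])
puts format("Claude Code: $8.00, about %.1fx Cursor", result[:ratio])
puts format("Claude Code hourly: $%.2f", hourly_rate(metered_usd: 8, minutes: 90))
```

With these inputs the estimate comes to $0.04 per Cursor request, $2 for the exercise, a 4x ratio, and roughly $5.33 per hour of Claude Code. Since 50 requests is an upper bound on what I used, the true ratio is probably somewhat higher.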

The psychology of metered pricing versus subscription pricing is interesting here. For most folks, Claude Code is not going to be a replacement for Cursor. It's going to be something they use in addition to Cursor. So they're really going to have to ask themselves: even if Claude Code is better, is it worth the incremental cost over their subscription when they already get so much Agent use included in their subscription?

Cursor wins on cost.

Autonomy

I first did the exercise with Claude Code.

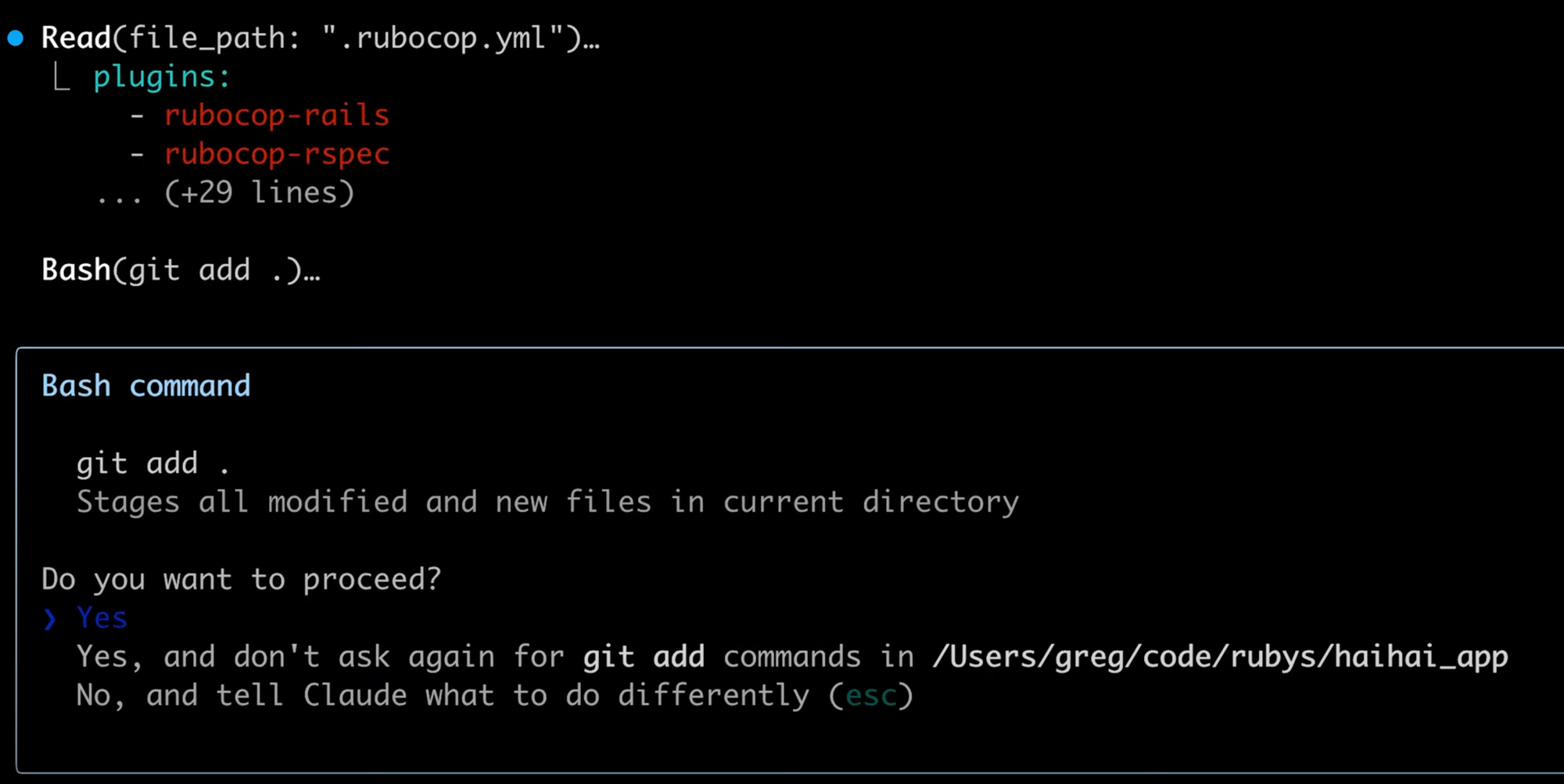

When Claude Code suggests a diff or command, you have three options:

- Yes

- Yes and don't ask again for this type of action

- No, do something else

I was hesitant to relinquish control at first, but after Claude Code performed the same command a couple of times, I finally said, "Yes, okay, you can do this command and you don't have to ask for permission." By the end of my session, it was doing almost everything autonomously because it had incrementally earned my trust.
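
The earned-trust pattern is simple enough to sketch. The Ruby below is purely illustrative and is not how Claude Code is implemented. The `PermissionGate` class, its method names, and the `:yes`, `:always`, and `:no` symbols are all hypothetical stand-ins for the three options above.

```ruby
require "set"

# Hypothetical approval gate that remembers "don't ask again" per action type.
class PermissionGate
  CHOICES = %i[yes always no].freeze

  def initialize
    @always_allowed = Set.new
  end

  # Returns true if the action may run. `ask` is any callable that receives
  # the action type and a description and returns :yes, :always, or :no.
  def allowed?(action_type, description, ask:)
    # Previously trusted action types skip the prompt entirely.
    return true if @always_allowed.include?(action_type)

    choice = ask.call(action_type, description)
    raise ArgumentError, "unknown choice: #{choice.inspect}" unless CHOICES.include?(choice)

    @always_allowed << action_type if choice == :always
    choice != :no
  end
end

# Usage: scripted answers stand in for a human at the prompt.
gate = PermissionGate.new
answers = %i[yes always no]
ask = ->(_type, _description) { answers.shift }

gate.allowed?(:shell, "bundle exec rails test", ask: ask) # prompts, answered :yes
gate.allowed?(:shell, "bundle update", ask: ask)          # prompts, answered :always
gate.allowed?(:shell, "git status", ask: ask)             # no prompt, already trusted
gate.allowed?(:edit, "Gemfile", ask: ask)                 # prompts, answered :no
```

Keying trust on the action type rather than on a global switch is what lets the number of prompts shrink gradually over a session instead of all at once.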

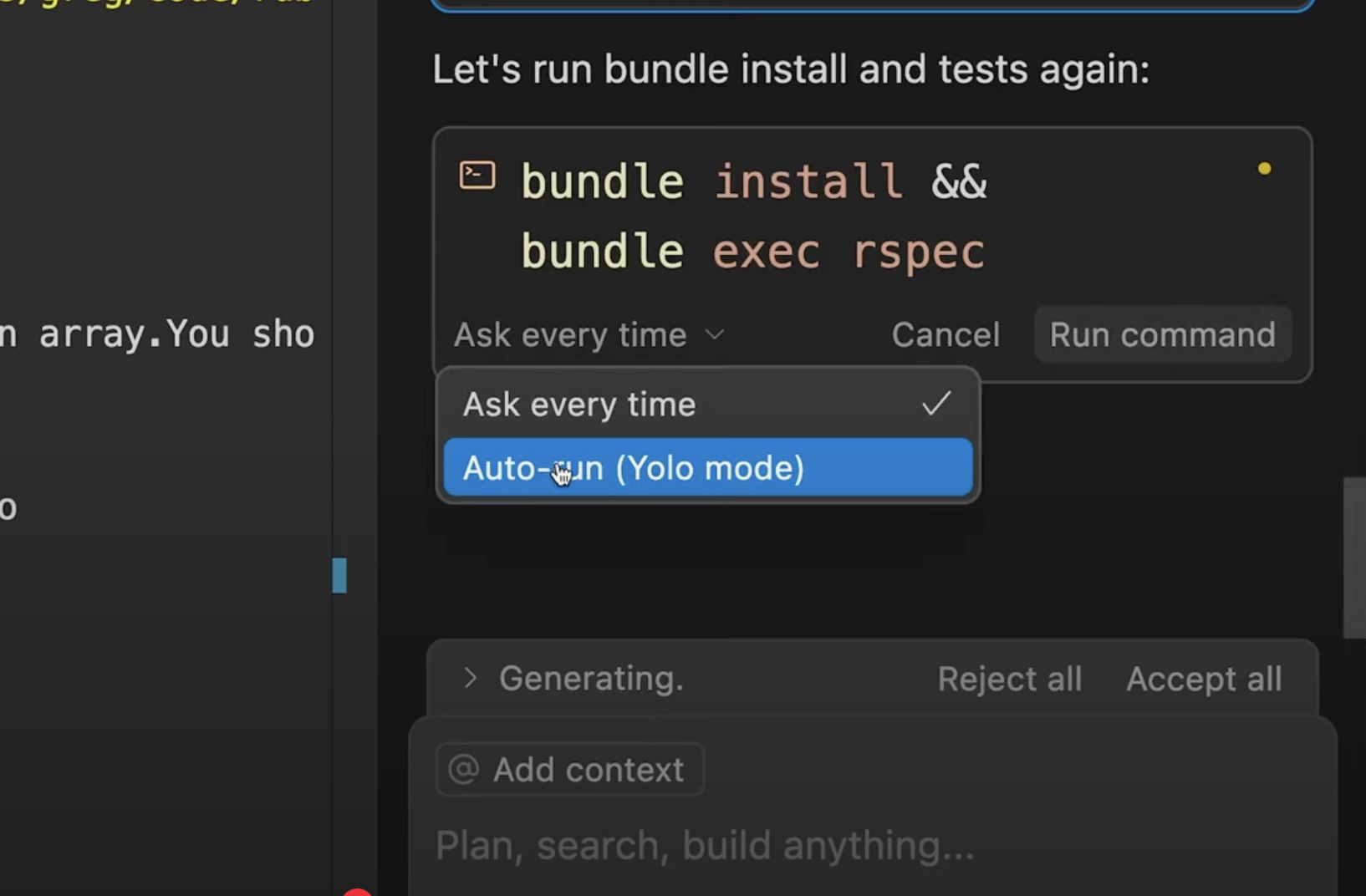

Cursor, on the other hand, had two options: manually approve every action, or turn on Yolo mode and let the agent run without asking.

Even though I'd already been through the experience with Claude Code where I'd given it permission to do basically everything, I did not trust Cursor Agent enough to turn on Yolo mode. Thus, I had to manually approve every action, which made working with Cursor Agent an exercise in button mashing.

I suspect that Cursor will roll out incremental permissions. It feels like an easy enough change given that they have command whitelists. But as of today, as we all try to grapple with how much to trust our coding agents, I think Claude Code's incremental permissions and earned trust work better.

Claude Code wins on Autonomy.

Tests & Version Control

Finally, let's talk about the whole software development lifecycle. I've tried to embrace test-driven development or, at minimum, have strong test coverage as I've let LLMs do more and more of my development.

I felt like Claude Code did a much better job both working with my tests and iterating based on their feedback. Perhaps this is just a vibes thing due to the aforementioned UX issues of Cursor running terminal commands in a tiny pane. But Claude Code just felt more natural and frictionless anytime that we were running terminal commands.

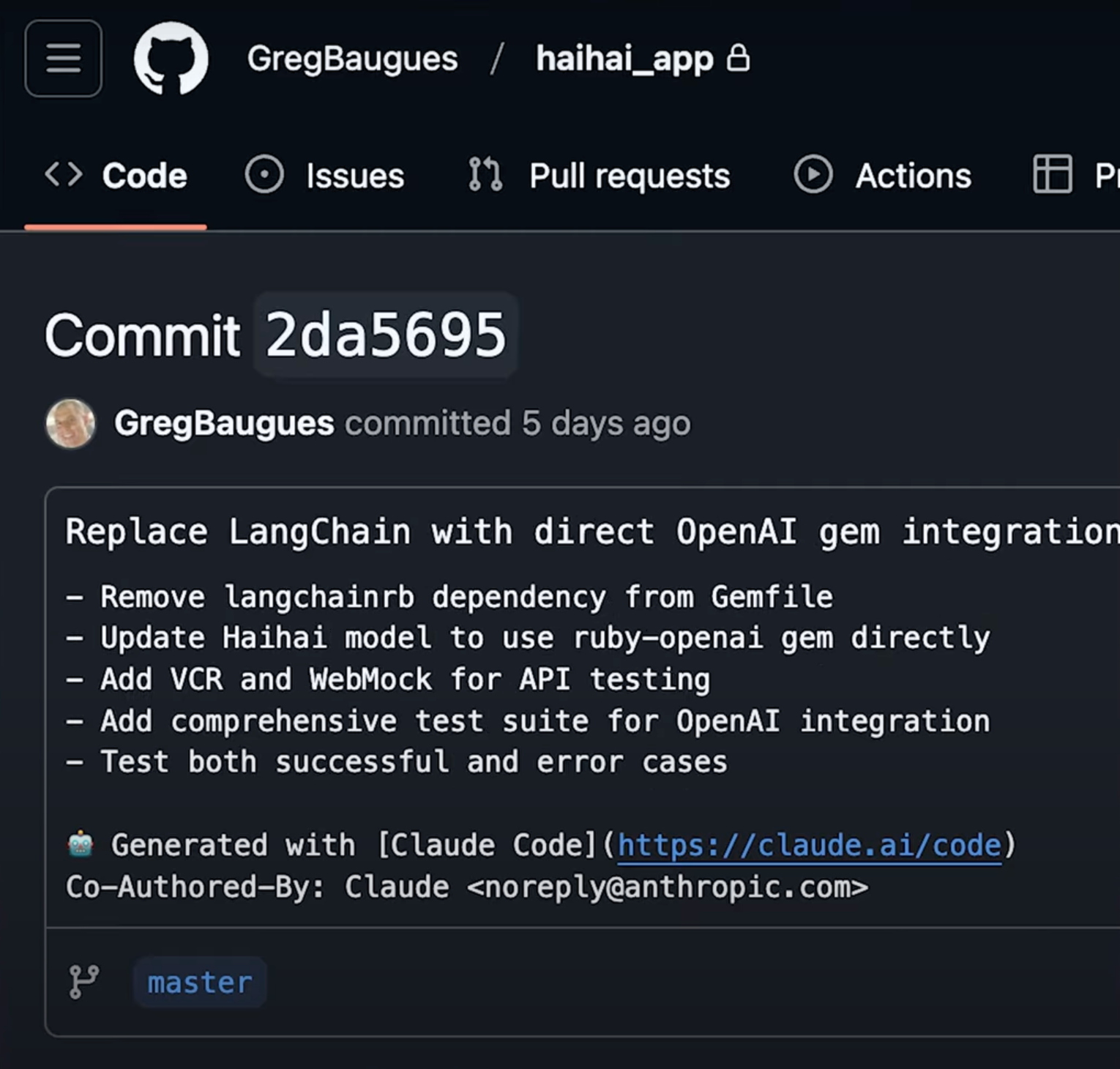

Claude Code also wrote the most beautiful and thorough commit messages that have ever been pushed to my repos. Cursor does have a button to generate commit messages in its built-in graphical interface for git, but it would write one-liners (just like I do!).

Claude Code wins on ability to interact with tests and version control.

Final Thoughts

Before I crown a winner, let's acknowledge two things. First, I gave both coding agents these three coding tasks on a project that I was stalled on, and both completed the job.

I can't believe we're here. I didn't expect these coding agents to work as well as they did. For the last couple of years, I've thought that while LLMs worked well for coding, it was essential to have a human in the loop orchestrating the changes.

This is one of the first times I've used a coding agent and been truly impressed with the results, feeling like it did a better job than I could.

Were they perfect? No. Is this codebase as complex as what you might be working on at work? Probably not. But this was a non-trivial codebase running in production, and these agents applied changes, wrote tests, and wrote commit messages better than I would.

Second, I don't want to set up a false dichotomy of Claude Code versus Cursor Agent. The truth is you probably could use both if you want to. You could even open Claude Code inside a terminal inside Cursor, and then you get the best of both worlds: let Claude make the changes, and then review them inside your IDE.

If you're a software developer these days and you have the ability, you should probably get the $20/month Cursor subscription, get familiar with it, and also experiment with Claude Code, and just watch your costs as you do. Make sure you're compacting your conversation history often to help keep costs down. Just use both tools to get familiar with them. This isn't an either/or thing.

As of today, I prefer Claude Code. I thought the UX was better. I loved the way it had incremental permissions and earned my trust, and I thought it did a better job working with version control and tests.

That said, I'm not giving up my Cursor subscription and I'm far from ready to ditch an IDE. The Cursor team iterates and ships so fast that I'm sure they're going to be learning from Claude Code, and you'll likely see a lot of these changes and improvements coming to Cursor very soon.